Transfer Learning for Small and Different Datasets: Fine-Tuning A Pre-Trained Model Affects Performance

(1) The International School Bangalore, Bangalore, Karnataka, India, (2) Adarsh Palm Retreat, Bangalore, Karnataka, India

https://doi.org/10.59720/20-130

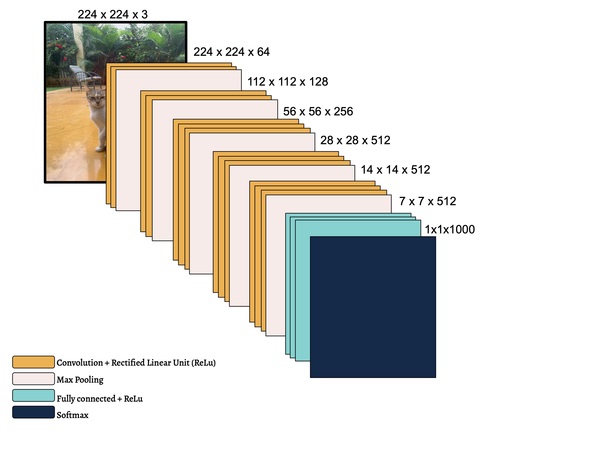

Machine learning and deep learning algorithms are rapidly becoming integrated into everyday life. Whether the application is Face ID unlocking your phone or the detection of deadly diseases like melanoma, neural networks have traditionally been designed to work in isolation, each accomplishing a task once thought impossible for computers. However, these algorithms are trained to solve extremely specific tasks, and a model has to be rebuilt from scratch whenever the source and target domains or the required task change. Transfer learning is a field that reuses the knowledge and weights learned on one task for a related task. This process is quite smooth when enough data is available and the new task is similar to the previously learned one. However, research on cases where these two conditions are not met is scarce. The purpose of this research is to investigate how fine-tuning a pre-trained image classification model affects accuracy on a binary image classification task. Image classification is widely used, and when only a small dataset is available, transfer learning becomes an important asset. This study uses convolutional neural networks, specifically the VGG-16 model trained on ImageNet. Through this study, I investigate whether there are specific trends in how fine-tuning affects accuracy when the model is applied to a small dataset that is dissimilar from ImageNet. This work is a first step toward quantifiable methods for training a model with transfer learning techniques.
